| EXCALIBUR Adaptive Constraint-Based Agents in Artificial Environments |

| [ORC QUEST REVISITED] | [Constraints] [Heuristics] [Global Search Control] [Evaluation] |

| [ Please note: The project has been discontinued as of May 31, 2005 and is superseded by the projects of the ii Labs. There won't be further updates to these pages. ] |

(Related publications: [PUBLink] [PUBLink])

The problem does not require an application of sophisticated heuristics. The improvement heuristics can be the same for the Performers, Pain and Orc constraint. To change the plan (i.e., the CSP), there are six modification alternatives:

For all six decision alternatives, there is a constraint-internal value that represents the preference for this alternative. All preference values are initially set to 1. If a constraint is called to effect an improvement of the plan, it increases an alternative's value by one if this alternative was chosen by the constraint's last improvement decision and the constraint's goal-/cost-function value is now better than the last time the constraint was called. If the goal-/cost-function value has deteriorated or is the same as last time, the preference value is decreased by two. Each time there is a consecutive deterioration, the decrease is doubled. However, no preference value can fall below one. Then, an alternative is chosen with a probability that is proportional to the alternative's preference value.

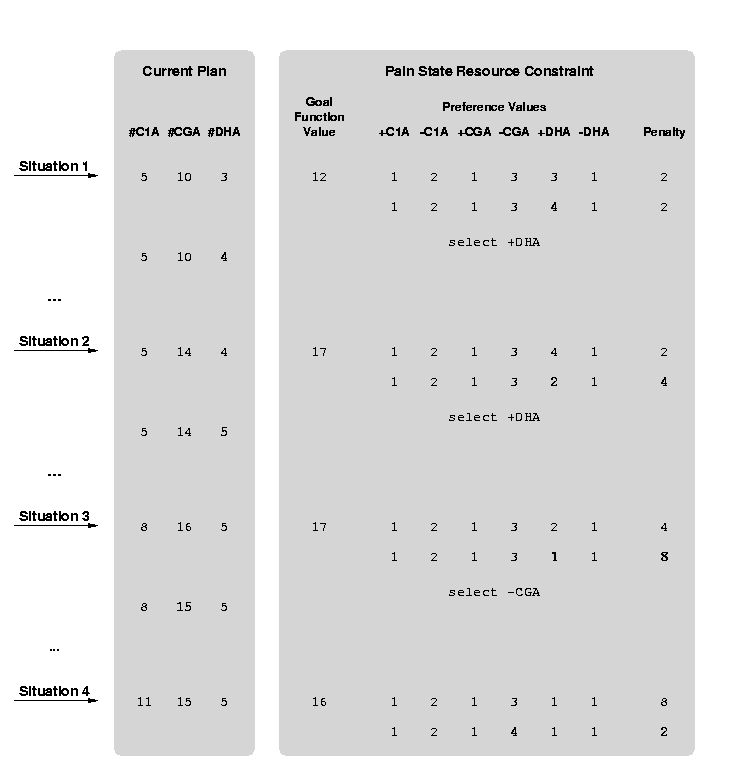

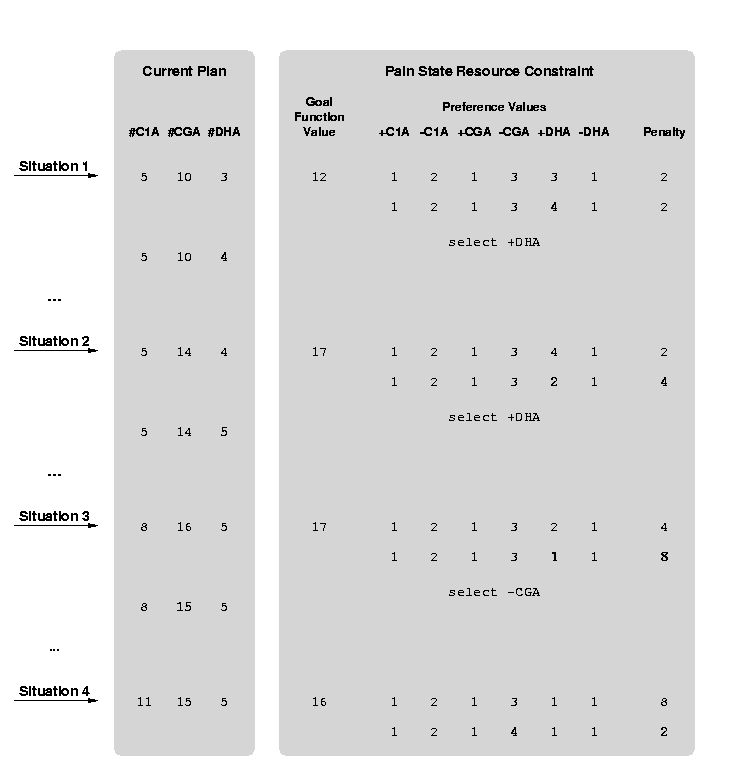

In the following, the heuristic is illustrated using the Pain State Resource Constraint. Consider a situation in which the current plan consists of 5 catch_only_one actions (C1A), 10 catch_a_group actions (CGA) and 3 deliver_humans actions (DHA) and the Pain constraint is chosen to perform a plan improvement (see Situation 1 in the figure below).

The Pain constraint's goal function calculates the sum of the Contribution variables of the linked state tasks. There are currently 5 linked state tasks with a Contribution of 1, 10 with a Contribution of 4, and 3 with a Contribution of -11. Thus, the constraint's goal function has a value of 5 × 1 + 10 × 4 + 3 × (-11) = 12. The current preference values of the constraint are given in the figure above (`+' means addition and `-' means deletion).

Let's assume that the constraint's goal function value is now better than the last time the constraint was called and that the constraint's last improvement decision was to add a deliver_humans action. Accordingly, this decision's preference value is rewarded by increasing it by one (next line in the figure above). Next, an alternative to improve the current situation is s elected. The choice probability for the +DHA option is the highest (preference value divided by the sum of all preference values; 4 ÷ 12 = 33%), and we assume that this alternative is chosen. The plan/CSP is changed according to this option.

After some iterations, the Pain constraint can be called again (see Situation 2 in the figure above). In the meantime, the plan has been changed and some more catch_a_group actions are added. The Pain constraint's goal function value has deteriorated since the last time the constraint was called and its last decision's preference value is therefore decreased by the penalty value of 2. In addition, the penalty value is doubled. The choice probability for the +DHA option is now only 2 ÷ 10 = 20%, but we assume that this alternative is chosen again. The plan is changed according to this option.

After some time, the Pain constraint is called once more (Situation 3). The constraint's goal function value is the same as at the last call. Stagnation is considered to be as bad as deterioration, and the +DHA option's preference value is therefore decreased by the penalty value of 4, and the penalty value is doubled again. However, the +DHA preference value is increased to its minimum of 1 to ensure that the option retains a chance of being chosen. We assume that the -CGA alternative is chosen this time (probability of 3 ÷ 9 = 33%). The plan is changed according to this option.

At the next call of the Pain constraint (Situation 4), the constraint's goal function value has improved, and so, the -CGA preference value is increased and the penalty value is set back to 2.

| [ORC QUEST REVISITED] | [Constraints] [Heuristics] [Global Search Control] [Evaluation] |

For questions, comments or suggestions, please contact us.

Last update:

May 20, 2001 by Alexander Nareyek